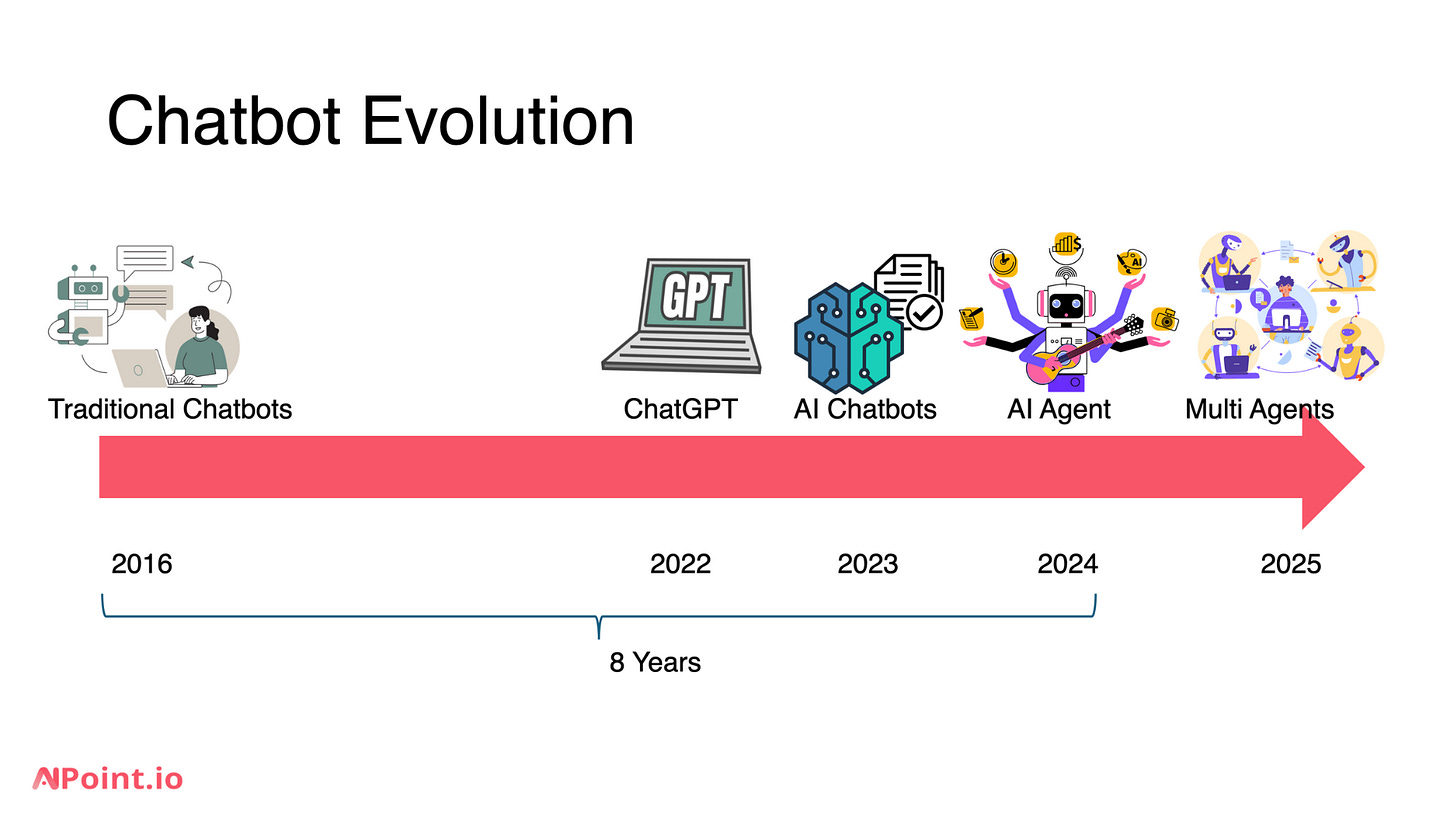

The Evolution of Chatbots: From Traditional Chatbots to AI Agents

This is the written version of my talk at the GEEQ meetup in Sydney, Australia on 5th December 2024. I have written down as much as I remember for anyone keen to learn about chatbots and beyond.

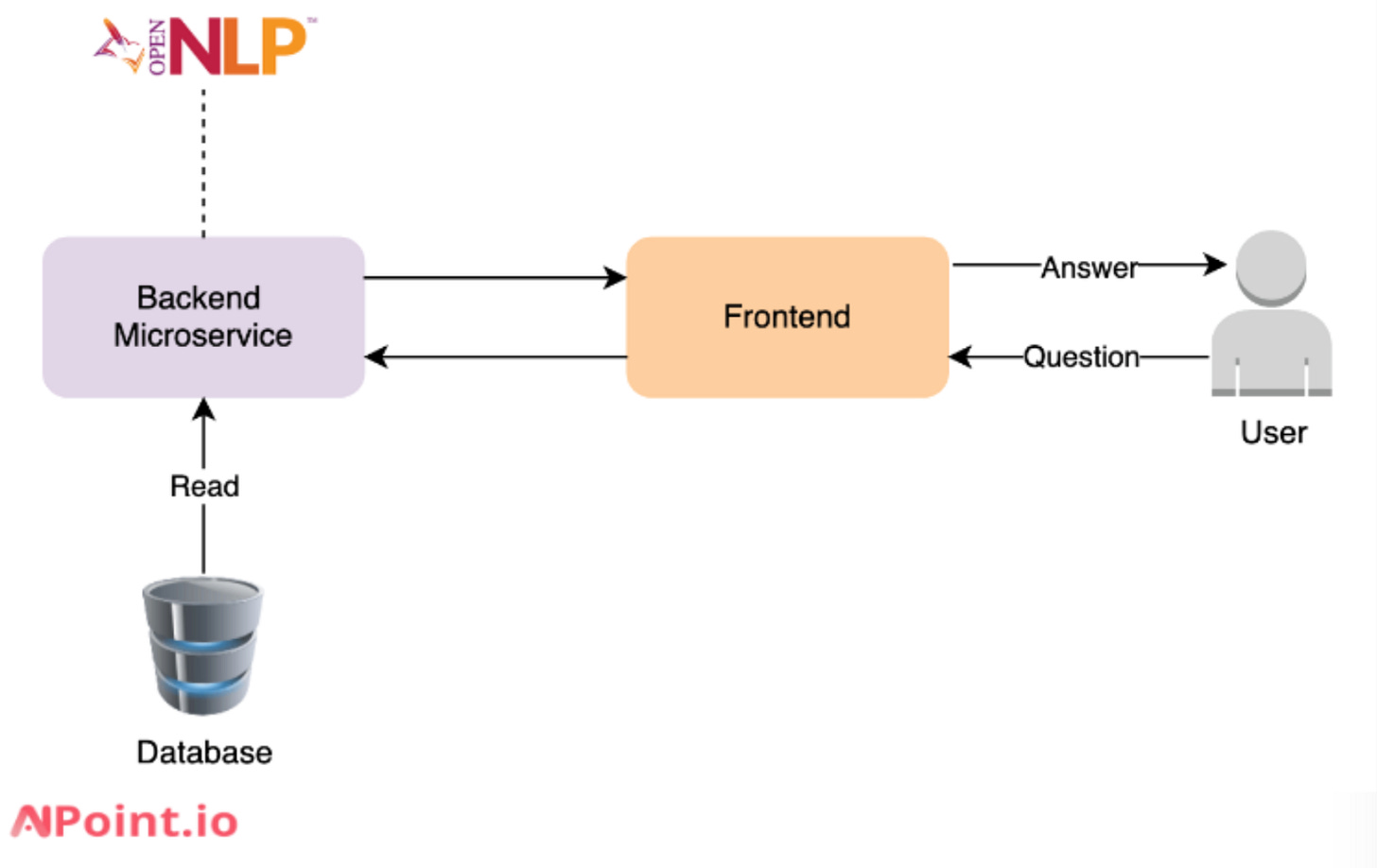

In 2015-2016, chatbots were the new trend in tech. Given the technology available at the time, we can call them traditional chatbots. During that period, I built a customer support chatbot for Gumtree Australia (part of eBay Classifieds Group). I used Apache OpenNLP and Java to develop a microservice-based chatbot and trained the model with customer support data pulled from Gumtree’s data warehouse.

Here’s how it worked: the chatbot broke down customer enquiries using NLP techniques such as tokenization, part-of-speech tagging, and parsing, then selected the response with the highest confidence score. The training data focused on four key categories that were common support issues on the platform: SCAM, SMS Fraud, Report Ads, and Accounts.
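To make the "highest confidence score" idea concrete, here is a minimal Python sketch. It is not the Gumtree implementation (which used Java and a trained NLP model): it swaps the trained model for hypothetical hand-written keyword lists so that the tokenize, score, and pick-the-best flow is visible. The keywords, canned responses, and the 0.2 confidence threshold are all illustrative assumptions.

```python
import re
from collections import Counter

# Hypothetical keyword lists standing in for a trained model. The real bot
# learned from Gumtree support data; these words are illustrative only.
TRAINING_DATA = {
    "SCAM": ["scam", "fake", "buyer", "overseas", "money", "payment"],
    "SMS Fraud": ["sms", "text", "message", "phone", "link", "code"],
    "Report Ads": ["report", "ad", "listing", "offensive", "spam", "remove"],
    "Accounts": ["account", "password", "login", "email", "locked", "reset"],
}

# Hypothetical canned responses, one per category.
RESPONSES = {
    "SCAM": "This may be a scam. Never send money to someone you haven't met.",
    "SMS Fraud": "Don't click links or share codes from unexpected text messages.",
    "Report Ads": "You can report an ad using the 'Report' link on the listing.",
    "Accounts": "You can reset your password from the login page.",
}

FALLBACK = "Sorry, I didn't understand that. Let me connect you to a support agent."


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def classify(text: str) -> tuple[str, float]:
    """Return the best-matching category and a crude confidence score
    (the fraction of tokens that are keywords for that category)."""
    tokens = Counter(tokenize(text))
    total = sum(tokens.values()) or 1  # avoid division by zero on empty input
    scores = {
        category: sum(tokens[word] for word in keywords) / total
        for category, keywords in TRAINING_DATA.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]


def respond(text: str, threshold: float = 0.2) -> str:
    """Pick the response with the highest confidence, or hand over to a human."""
    category, confidence = classify(text)
    if confidence < threshold:
        return FALLBACK
    return RESPONSES[category]


print(respond("I forgot my password and my account is locked"))
```

The low-confidence fallback mirrors the limitation discussed below: anything outside the known categories cannot be answered, so the sensible behaviour is to route it to a person.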

The architecture was straightforward: a backend microservice, a frontend microservice, and a datastore for the trained models. The chatbot was rule-based and limited to specific use cases. Even with these limitations, chatbots started gaining traction. Why? Because they solved real business problems.

Why Chatbots Became Popular

Here’s what made traditional chatbots popular:

24/7 Availability: Customers could receive support at any time, no matter the timezone.

Cost Efficiency: Companies could reduce their customer support team size by delegating repetitive inquiries to chatbots.

Scalability: Unlike humans, chatbots could handle many conversations simultaneously, which mattered most during peak times.

Faster Responses: No more waiting for a consultant—chatbots offer instant replies.

Easy Integration: Chatbots could be integrated into messaging platforms such as Facebook Messenger, WhatsApp, or Slack.

But there were limitations to traditional chatbots:

Static Rules: They could only respond according to the rules they were built with.

Limited Understanding: Because of basic NLP capabilities and static rules, chatbots could misunderstand enquiries and give incorrect responses.

The Rise of ChatGPT and Large Language Models

In 2022, ChatGPT was released and suddenly everything changed—not just for chatbots, but in how we solve problems with technology.

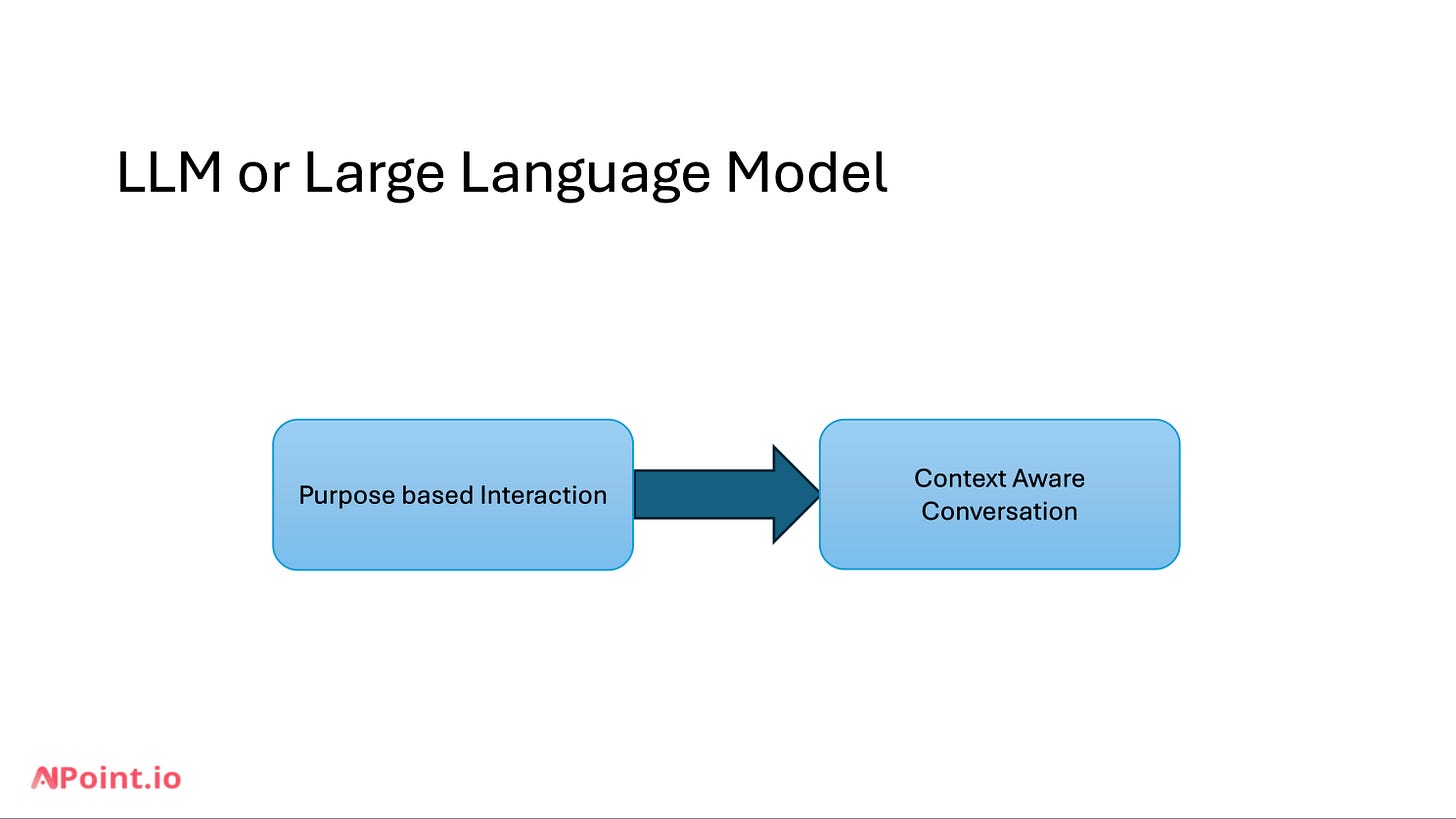

ChatGPT brought Large Language Models (LLMs) into the mainstream. Unlike traditional chatbots, LLMs don’t rely on static rules. Instead, they are pre-trained on massive datasets and use transformer-based neural networks to model language at a much deeper level. With billions of parameters, they can generate dynamic responses. As a result, chatbots moved from intent-based interactions to context-aware conversations.

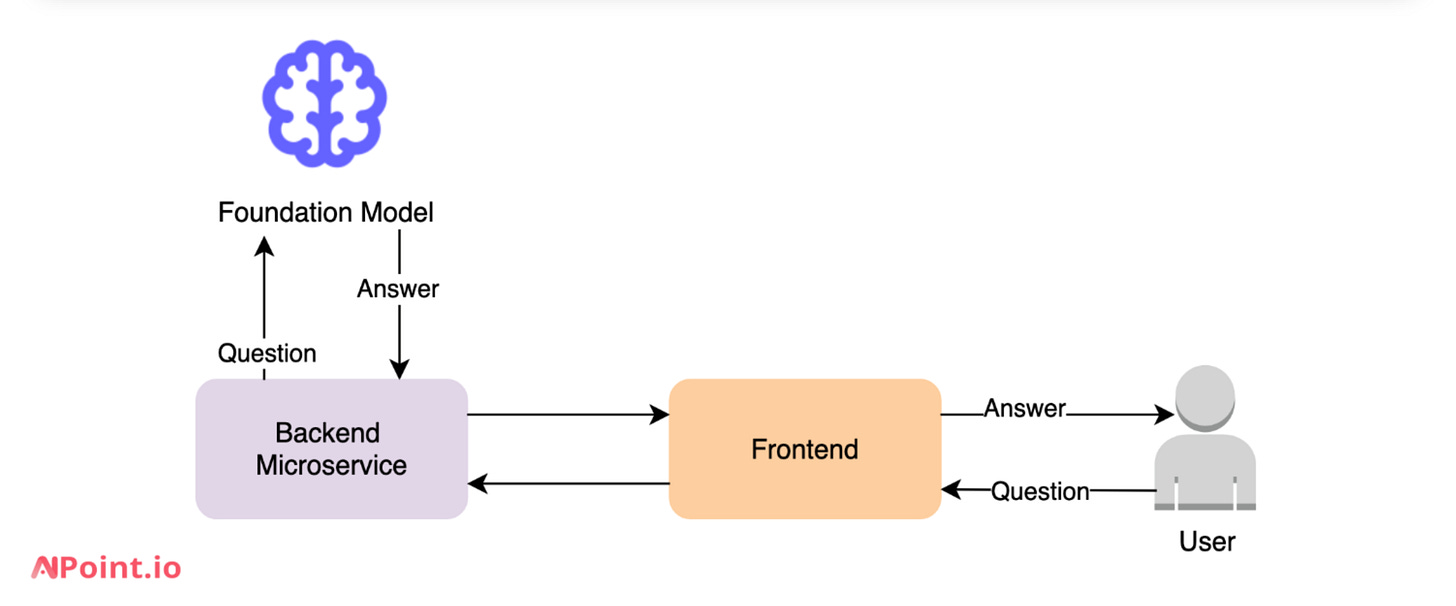

This was a game changer for chatbots. They were no longer limited to answering FAQs; they could now generate new content and understand complex customer enquiries. As ChatGPT grew in popularity, businesses became interested in applying it to their own use cases. Here is a simple technical architecture showing how a foundation model can be used in a chatbot service to build a ChatGPT-like chatbot.
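The chatbot-service side of such an architecture can be sketched in a few lines of Python. This is an illustrative assumption, not a specific vendor's API: `ModelFn` is a hypothetical interface for "call the foundation model with the conversation so far", and `fake_model` stands in for a real LLM so the example runs offline. The service's job is to keep per-session history, prepend a system prompt, and trim old messages so the request fits the model's context window.

```python
from dataclasses import dataclass, field
from typing import Callable

# One chat message: {"role": "system" | "user" | "assistant", "content": "..."}
Message = dict[str, str]

# Hypothetical model interface: takes the conversation so far and returns the
# assistant's reply. In production this would wrap a foundation-model API call.
ModelFn = Callable[[list[Message]], str]


@dataclass
class ChatService:
    model: ModelFn
    system_prompt: str = "You are a helpful customer support assistant."
    max_history: int = 20  # most recent messages sent to the model per request
    sessions: dict[str, list[Message]] = field(default_factory=dict)

    def reply(self, session_id: str, user_text: str) -> str:
        # Each session keeps its own history so replies are context-aware.
        history = self.sessions.setdefault(session_id, [])
        history.append({"role": "user", "content": user_text})
        # Trim old messages so the prompt stays within the model's context window.
        recent = history[-self.max_history:]
        messages = [{"role": "system", "content": self.system_prompt}, *recent]
        answer = self.model(messages)
        history.append({"role": "assistant", "content": answer})
        return answer


def fake_model(messages: list[Message]) -> str:
    """Stand-in for a real LLM, used only to show the data flow."""
    user_turns = [m for m in messages if m["role"] == "user"]
    return f"seen {len(user_turns)} user message(s); last: {user_turns[-1]['content']}"


service = ChatService(model=fake_model)
service.reply("alice", "Hi")
print(service.reply("alice", "Can I get a refund?"))
```

Keeping the model behind a plain function keeps the rest of the service independent of whichever foundation model is chosen.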

Domain-Specific Chatbots using Fine-Tuning and RAG

While LLMs have a big advantage over traditional NLP techniques, they lack domain-specific knowledge. Adapting LLMs to specific industries therefore became important.

Because of that, two key techniques emerged:

Fine-Tuning

RAG (Retrieval-Augmented Generation)

Let’s break each of them down.

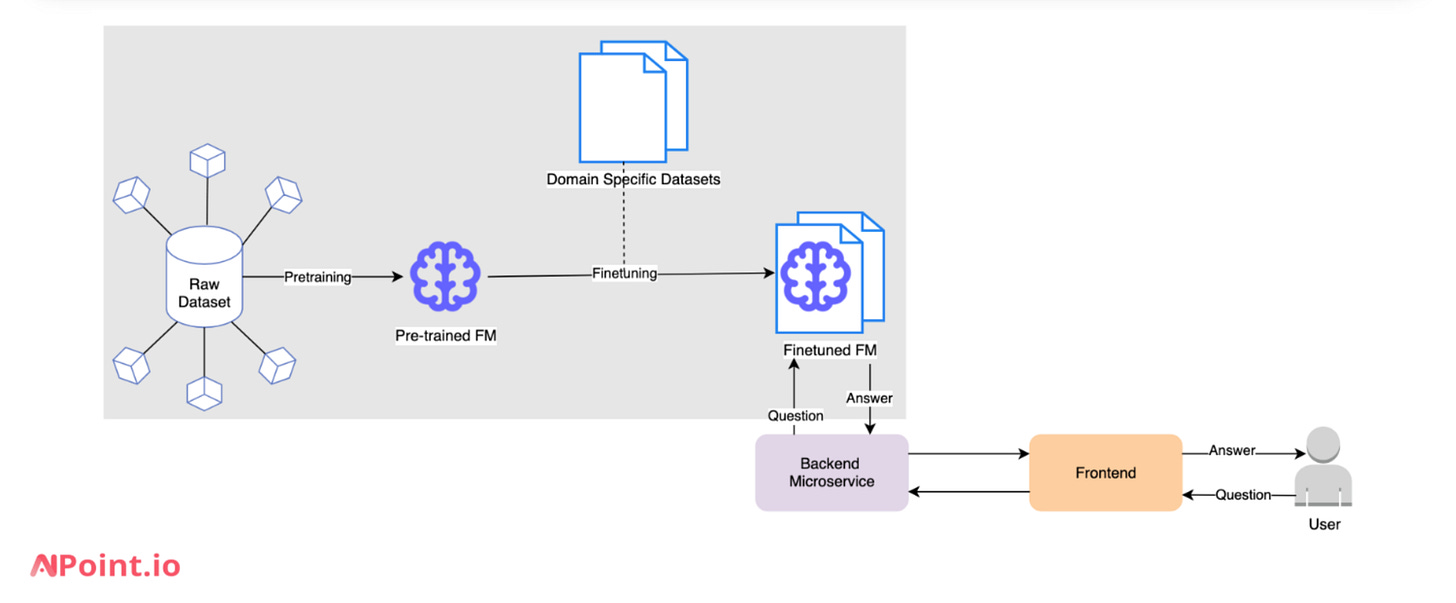

1. Fine-Tuning

Fine-tuning involves further training an existing, pre-trained LLM on domain-specific datasets. For example, a legal chatbot might be fine-tuned on legal documents, allowing it to provide accurate, context-aware responses to legal enquiries. The following diagram demonstrates how fine-tuning can be implemented for an AI chatbot.
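Much of the practical work in fine-tuning is preparing the training data. As a minimal sketch, assuming a chat-style JSON Lines format (one JSON object per line, each holding a list of role/content messages, as accepted by some hosted fine-tuning services), the Python below turns hypothetical legal Q&A pairs into training examples. The Q&A pairs and system prompt are illustrative; the actual training run depends on the provider or framework and is not shown.

```python
import json

# Hypothetical domain Q&A pairs. A real legal dataset would be much larger
# and reviewed by legal experts before training.
QA_PAIRS = [
    (
        "What is a statute of limitations?",
        "A statute of limitations sets the maximum time after an event "
        "within which legal proceedings may be started.",
    ),
    (
        "What does pro bono mean?",
        "Pro bono describes legal work done free of charge, "
        "usually for people who cannot afford a lawyer.",
    ),
]

SYSTEM_PROMPT = "You are a legal information assistant."


def to_chat_example(question: str, answer: str) -> dict:
    """Wrap one Q&A pair as a chat-style training example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialise the examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(to_chat_example(q, a)) + "\n" for q, a in pairs)


# This text would be saved to a .jsonl file and uploaded to a fine-tuning job.
print(to_jsonl(QA_PAIRS), end="")
```

Every regulatory update means regenerating this file and re-running training, which is where the maintenance cost listed below comes from.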

Benefits:

Customization for specific industries (e.g., healthcare, legal, customer support).

Improved response quality within a specific domain and industry.

Challenges:

Frequent model training is required to keep up with industry updates or regulatory changes.

Costly, because each retraining run consumes compute and the tech team’s time.

The risk of hallucination (i.e., generating inaccurate or irrelevant information), especially when fine-tuning on massive datasets.

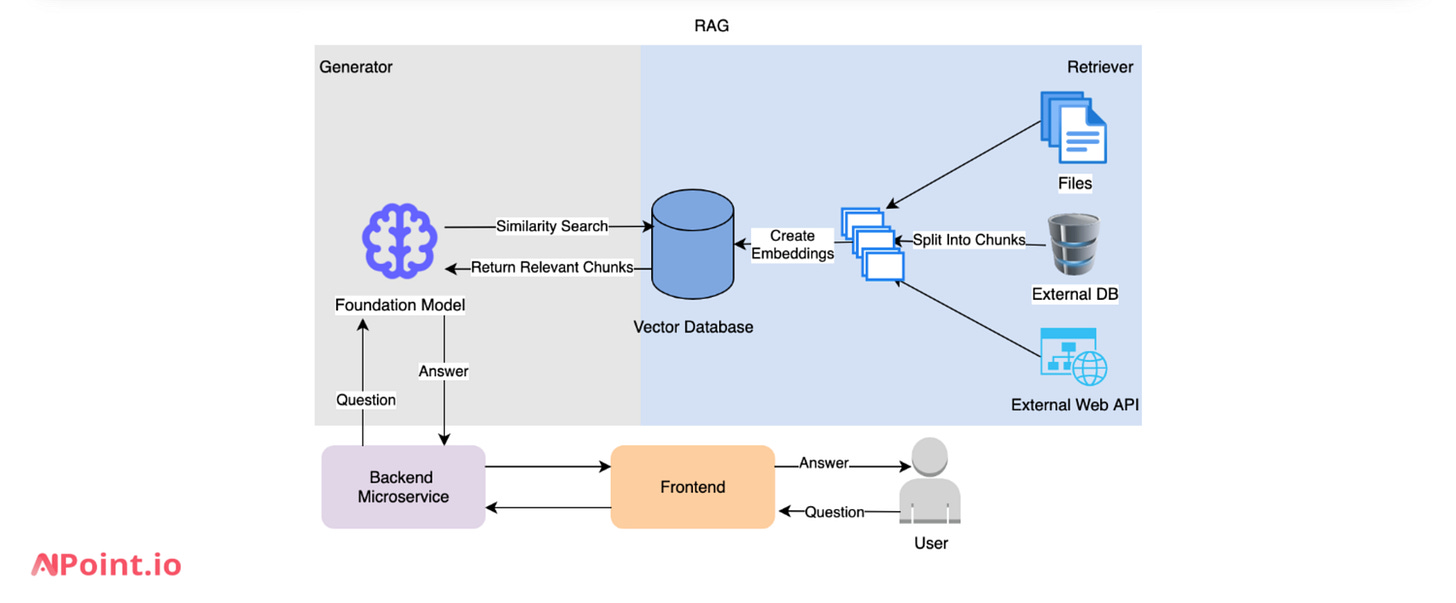

2. Retrieval-Augmented Generation (RAG)

RAG takes a different approach. Instead of relying only on pre-trained knowledge, it dynamically fetches information from external data sources. A RAG architecture has two components: a retriever and a generator. Here’s how RAG works:

The retriever pulls relevant data from sources such as databases, documents, or websites.

The generator uses that data to craft accurate, up-to-date responses.
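The retriever-then-generator flow can be sketched in Python as follows. To stay self-contained, this sketch swaps the embedding models and vector databases that most RAG systems use for simple bag-of-words cosine similarity, and it stops at building the augmented prompt; the call to the LLM (the generator) is left out. The documents are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base. In practice these chunks would be pulled from
# databases, documents, or websites and kept up to date.
DOCUMENTS = [
    "Refunds are processed within 5 business days of approval.",
    "To reset your password, use the 'Forgot password' link on the login page.",
    "Ads that break the posting rules can be reported from the ad page.",
]


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Retriever: return the k documents most similar to the query."""
    q = vectorize(query)
    return sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augment the user's question with the retrieved context for the generator."""
    lines = "\n".join(f"- {chunk}" for chunk in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{lines}\n"
        f"Question: {query}"
    )


question = "How do I reset my password?"
prompt = build_prompt(question, retrieve(question, DOCUMENTS))
print(prompt)  # this prompt would then be sent to the LLM (the generator)
```

Updating the chatbot’s knowledge here means editing `DOCUMENTS`, not retraining a model, which is the main trade-off against fine-tuning.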

Benefits:

Access to dynamic, real-time knowledge.

No need for repeated model training.

Cost-effective and scalable.

Challenges:

Potential latency due to fetching external data.

Dependence on data quality, and the risk of biases in the retrieved data.

Security and privacy concerns when accessing sensitive data.

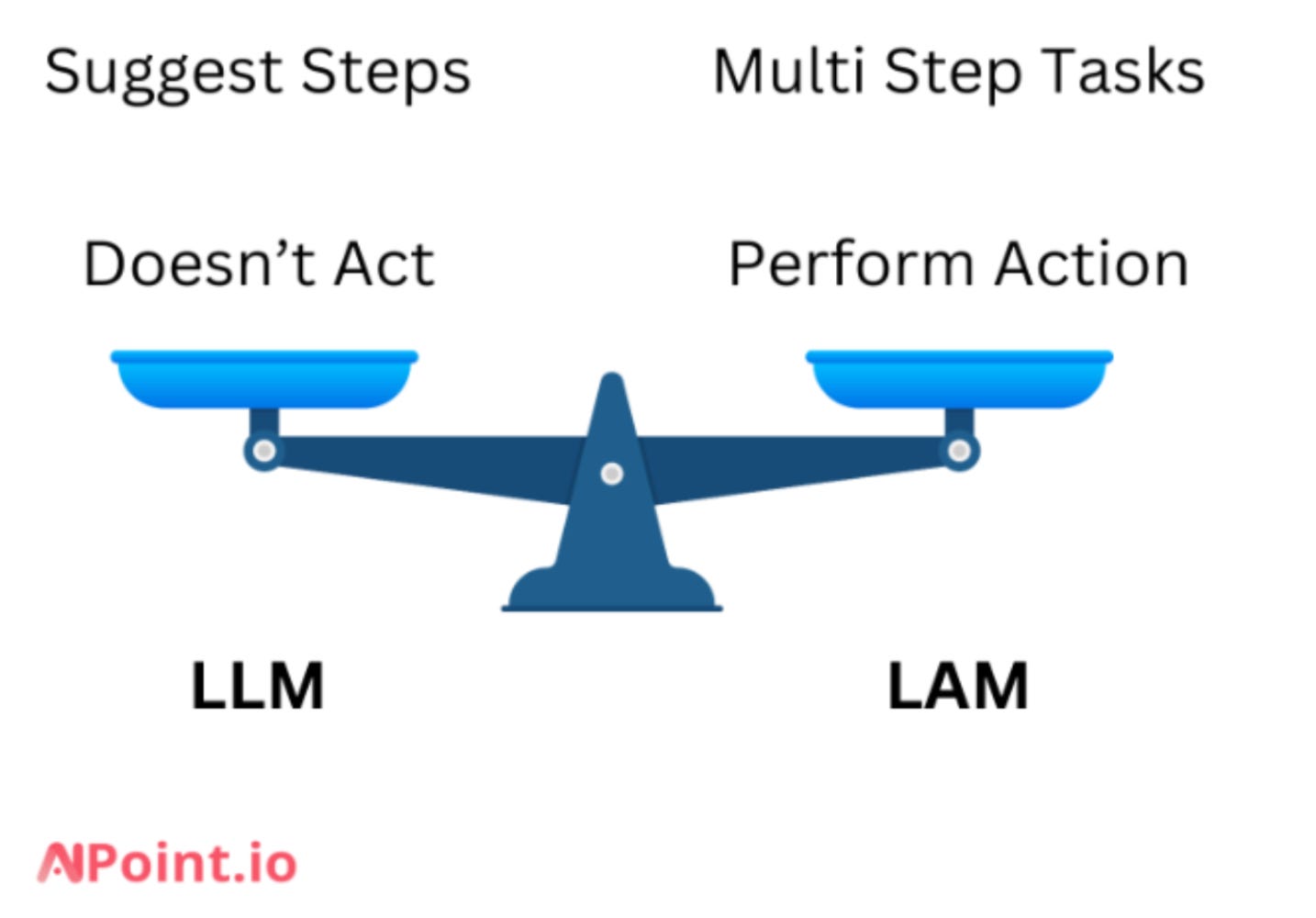

AI Agents: Chatbots That Act

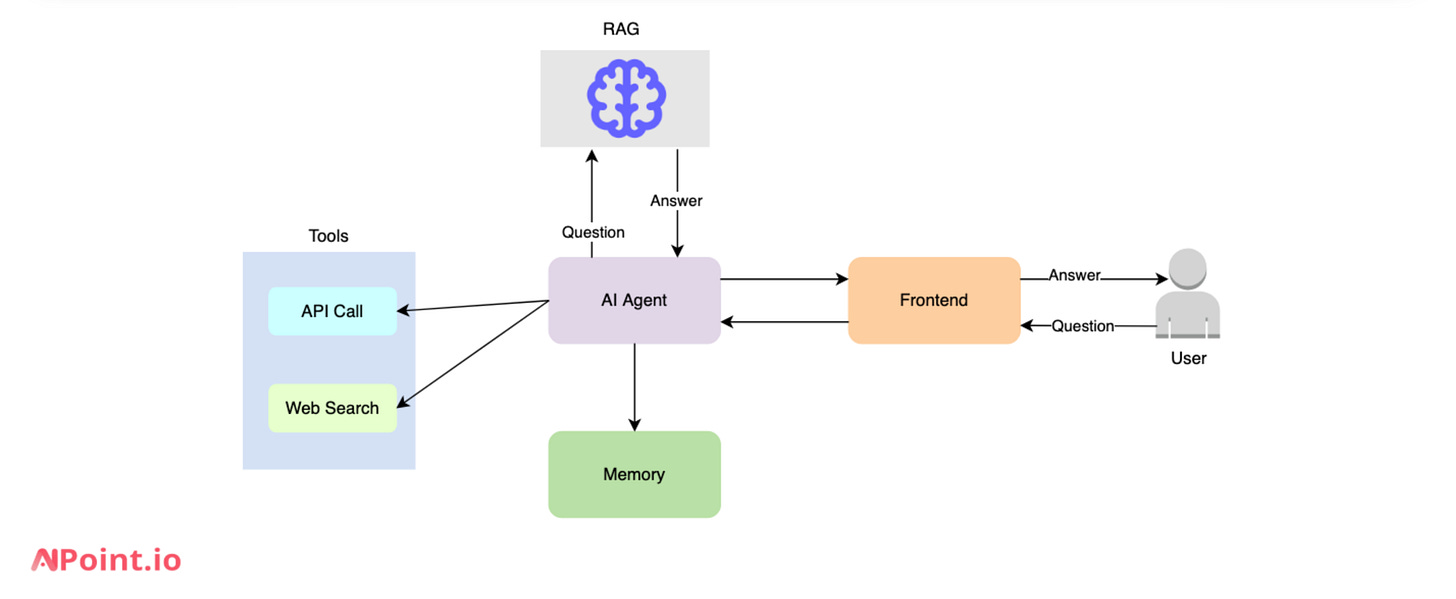

The idea of AI agents is to give chatbots a more autonomous role. Agents don’t just respond to prompts; they set goals, execute tasks, and handle unexpected scenarios. For example, in customer service, an AI agent could process a refund, send a confirmation email, and schedule follow-ups, all without additional commands.

AI agents can leverage Large Action Models (LAMs), which are designed to perform actions based on an initial command or prompt. Agents can also integrate with external tools and systems to complete tasks autonomously.
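The refund example can be sketched as a simple tool-calling loop in Python. All tool names are hypothetical, and the `plan` function is a hard-coded stand-in for the model: in a real agent, an LLM or LAM would turn the goal into tool calls and would usually observe each result and re-plan, which this sketch simplifies to "stop on the first failure".

```python
from typing import Callable


# Hypothetical tools. Real versions would call payment, email and
# scheduling systems instead of returning strings.
def process_refund(order_id: str) -> str:
    return f"refund issued for {order_id}"


def send_email(to: str, subject: str) -> str:
    return f"email '{subject}' sent to {to}"


def schedule_follow_up(order_id: str, days: int) -> str:
    return f"follow-up for {order_id} scheduled in {days} days"


TOOLS: dict[str, Callable[..., str]] = {
    "process_refund": process_refund,
    "send_email": send_email,
    "schedule_follow_up": schedule_follow_up,
}


def plan(goal: str) -> list[tuple[str, dict]]:
    """Stand-in for the model. A real agent would ask an LLM or LAM to turn
    the goal into tool calls; here a single hard-coded rule does it."""
    if "refund" in goal.lower():
        order_id = goal.split()[-1]  # assume the goal ends with the order ID
        return [
            ("process_refund", {"order_id": order_id}),
            ("send_email", {"to": "customer@example.com", "subject": "Your refund"}),
            ("schedule_follow_up", {"order_id": order_id, "days": 3}),
        ]
    return []


def run_agent(goal: str) -> list[str]:
    """Execute each planned tool call in order, stopping on the first failure."""
    log: list[str] = []
    for name, args in plan(goal):
        tool = TOOLS.get(name)
        if tool is None:
            log.append(f"unknown tool: {name}")
            break
        try:
            log.append(tool(**args))
        except Exception as exc:  # a real agent might re-plan here instead
            log.append(f"{name} failed: {exc}")
            break
    return log


for entry in run_agent("Refund order A123"):
    print(entry)
```

The single instruction "Refund order A123" triggers three actions, which is the difference from a chatbot that only returns text.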

Use Cases for AI Agents

AI agents are already gaining popularity in industries such as:

Customer Service: Automating refunds and booking appointments.

Sales and Marketing: Lead generation, email campaigns, and personalized outreach.

Travel: Managing itineraries and booking services.

But as with any technology, there are challenges:

Complex Implementation: Building AI agents requires more complex infrastructure than traditional chatbots.

Ethical and Security Concerns: Because AI agents can access external systems and take actions, they must be designed carefully to prevent unintended actions or data breaches.

Experimental State: We’re still learning how best to apply AI agents to different business scenarios, and the community is still relatively small at the time of writing.
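One common way to reduce the risk of unintended actions is to put a policy check between the agent and its tools. The Python sketch below assumes a hypothetical allow-list of tools and a hypothetical refund limit above which a human must approve; the names and the 100.0 threshold are illustrative, not a recommendation.

```python
from dataclasses import dataclass


@dataclass
class Action:
    tool: str
    amount: float = 0.0  # monetary value, where relevant


# Hypothetical policy. Real limits and tool lists would come from the business.
ALLOWED_TOOLS = {"process_refund", "send_email", "schedule_follow_up"}
APPROVAL_THRESHOLD = 100.0  # refunds above this amount need a human


def check_action(action: Action) -> str:
    """Return 'allowed', 'needs_approval', or 'blocked' for a proposed action."""
    if action.tool not in ALLOWED_TOOLS:
        return "blocked"  # the agent tried to use a tool it was never granted
    if action.tool == "process_refund" and action.amount > APPROVAL_THRESHOLD:
        return "needs_approval"  # pause and ask a human before acting
    return "allowed"


for proposed in [
    Action("process_refund", 40.0),
    Action("process_refund", 250.0),
    Action("delete_account"),
]:
    print(proposed.tool, proposed.amount, check_action(proposed))
```

Because the check runs outside the model, a confused or manipulated agent still cannot call tools it was never granted.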

What’s Next? Multi-Agent Infrastructure

The future lies in multi-agent systems, where AI agents collaborate to handle complex processes. For example, one agent might manage customer interactions while another focuses on backend workflows—all working together seamlessly.
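A minimal Python sketch of that division of labour: a customer-facing agent decides when to delegate and a backend agent carries out the workflow. Both classes are hypothetical, and a keyword check stands in for the LLM that would normally make the delegation decision; in practice each agent would typically be backed by its own model and tools.

```python
from dataclasses import dataclass


@dataclass
class Task:
    kind: str
    reference: str


class BackendAgent:
    """Hypothetical agent that runs backend workflows (e.g. refunds)."""

    def __init__(self) -> None:
        self.completed: list[Task] = []

    def handle(self, task: Task) -> str:
        self.completed.append(task)
        return f"{task.kind} completed for {task.reference}"


class CustomerAgent:
    """Hypothetical agent that talks to customers and hands backend work off."""

    def __init__(self, backend: BackendAgent) -> None:
        self.backend = backend

    def handle(self, message: str) -> str:
        # A keyword check stands in for the LLM that would decide to delegate.
        if "refund" in message.lower():
            order_id = message.split()[-1]  # assume the message ends with the order ID
            result = self.backend.handle(Task("refund", order_id))
            return f"All done: {result}."
        return "Happy to help. Could you tell me a bit more?"


backend = BackendAgent()
front_desk = CustomerAgent(backend)
print(front_desk.handle("Please refund order B7"))
```

The customer-facing agent never touches the refund system directly; it only passes a `Task` along, which keeps each agent’s responsibilities and permissions narrow.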

It’s fascinating how quickly the conversational AI landscape has evolved. Eight years ago, people avoided chatbots. Today, millions of people use ChatGPT for everything from advice to automation. The possibilities for automation are vast, but it’s important for businesses to approach this evolution thoughtfully.

Advice for Businesses

Start Small: Begin with an AI chatbot before scaling to AI agents. Treat this as a journey, not a destination.

Focus on Specific Use Cases: Think about how AI can enhance context awareness or personalization in your offering.

Invest in Training: Equip your teams with AI and ML skills to stay competitive. Every software engineer should learn AI fundamentals.

Test and Iterate: Start with small wins to minimize risks while exploring long-term opportunities.

The evolution of chatbots shows how technology simplifies user experiences while introducing new complexities behind the scenes. It’s an exciting time to innovate—so let’s build wisely.